NNadir

NNadir's Journal

Widespread Atmospheric Tellurium Contamination in Industrial and Remote Regions of Canada.

The paper in the recent primary scientific literature that I will discuss in this post has the same title as the post itself. It is here:

Widespread Atmospheric Tellurium Contamination in Industrial and Remote Regions of Canada. (Jane Kirk et al, Environ. Sci. Technol., 2018, 52 (11), pp 6137–6145)

The first sentence of the abstract says it all basically:

The introductory text from the full paper states it more completely:

By the way, if you're concerned about the tellurium in solar cells - most likely you're not because you've heard again and again and again and again and again ad nauseam that solar cells are "green" - don't be. Although the toxicology of tellurium is real, particularly in acid exposure owing to the formation of H2Te gas, its toxicology is dwarfed by that of the other component of "green" solar cells, cadmium.

The authors note that, to their knowledge, a planetary "tellurium cycle" has never been investigated, and so they set out to begin building one, at least in Canada.

They note that in seawater, the concentration of tellurium is on the order of 5-40 nanograms per liter, which is roughly two to three orders of magnitude smaller than the natural concentration of uranium in seawater, generally taken to be 3.4 micrograms per liter.

This is because of the formation of iron and manganese nodules which enrich tellurium by a factor of 50,000 and drop it on the seafloor.
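The comparison between tellurium and uranium in seawater can be checked with a few lines of arithmetic. This is only a sketch using the concentrations quoted above; the variable names are mine and are not taken from the paper.

```python
import math

# Concentrations quoted in the text above.
te_low_ng_per_l = 5.0     # tellurium in seawater, low end, ng/L
te_high_ng_per_l = 40.0   # tellurium in seawater, high end, ng/L
u_ug_per_l = 3.4          # uranium in seawater, micrograms/L

# Put uranium on the same unit basis (1 microgram = 1000 nanograms).
u_ng_per_l = u_ug_per_l * 1000.0

# Ratio of uranium to tellurium at each end of the tellurium range.
ratio_at_low_te = u_ng_per_l / te_low_ng_per_l
ratio_at_high_te = u_ng_per_l / te_high_ng_per_l

# Orders of magnitude are the base-10 logarithms of those ratios.
orders_at_low_te = math.log10(ratio_at_low_te)
orders_at_high_te = math.log10(ratio_at_high_te)

print(f"U/Te ratio: {ratio_at_high_te:.0f} to {ratio_at_low_te:.0f}")
print(f"Orders of magnitude: {orders_at_high_te:.2f} to {orders_at_low_te:.2f}")
```

At the high end of the tellurium range the gap is a little under two orders of magnitude, and at the low end a little under three.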

Thus when the world runs out of tellurium, given the extremely low - and thus environmentally suspect - energy to mass ratio of solar cells, cadmium telluride solar cells will lose their "renewable" status, if in fact, they ever had one.

Don't worry, be happy. Solar cells, as I've been hearing my whole adult life - and I'm not young - will save the world: Regrettably long after I, and all the people who have informed me of this happy fact with such blithe confidence, will be dead.

The authors note that natural tellurium flows exist, primarily volcanoes and the weathering of rocks with riverine transport, but that their estimates of anthropogenic sources effectively double the size of the flow:

They then describe their means of measurement:

They obtain sediment cores from the deepest parts of various Canadian lakes, and date the cores by use of cesium-137 (nuclear testing fallout).

The samples are microwave digested in hot aqua regia, a mixture of hydrochloric and nitric acid and analyzed using a modern Agilent 7700x ICP/MS.

The map in the paper gives a feel for the findings and the geography of the testing:

The caption:

Some results:

Te concentrations in lake sediment were generally steady and low (<0.02–0.07 mg kg⁻¹) in rural areas of Alberta (Battle, Pigeon; Figure 1a) and in the Athabasca Oil Sands region of Northern Alberta (both near oil sands industrial development: NE20, SW22, far from 2014-Y6A, and RAMP-271; Figure 1b). Near coal mining (post-1880) and combustion activities in Southern Saskatchewan near the city of Estevan (Figure 1c), sediment Te concentrations were only above detection limits after the advent of local, small-scale coal-fired generation (~1910). Increased sediment Te concentrations observed after ~1960 are coincident with the advent of larger generating facilities (950 MW).(28) Like fellow group 16 elements S and Se, Te is highly enriched in coal combustion aerosols (EF ~ 10⁴), particularly in the <2 μm particle fraction (EF ~ 10⁶).(15,29) A decline in sediment Te seen in the ~1980s may reflect early experiments in carbon capture, facility downtime due to refurbishments, and capacity reductions due to insufficient cooling water (1988; major drought), which occurred during this period.(30) Overall, the Estevan Te record remains confounded by the high mass accumulation rates, which dilute atmospheric deposition, and incomplete characterization of the natural baseline (Figure 1c).

Near metal smelters at Flin Flon and Thompson, Manitoba anthropogenic atmospheric Te deposition is obvious (Figure 1d-g). At Flin Flon (Figure 1d), with >100-fold increases in Te concentration observed after the opening (1930) of the Cu–Zn smelter. This facility was formerly Canada’s largest Hg point source, and as seen for Hg,(19) there is a strong association between proximity to the smelter and higher sediment Te concentrations (Figure 1d, Figure S3). This is not unexpected as Te is often associated with the gold content of volcanogenic massive sulfide deposits mined near Flin Flon.(31) Moreover, world Te production is mainly a byproduct from copper refinery anode sludges,(2,3) with Flin Flon being one of the early producers of Te starting in 1935.(32) Using the method previously used for Hg,(19) we estimate the inventory of anthropogenically sourced Te deposited within a 50 km radius of the Flin Flon smelter at 72.2 t (see Figure S3) over its operational history (1930–2010). Other major copper refining centers in the world likely show similarly enhanced Te deposition surrounding them.

Twenty-five Flin Flon area mines have contributed ore containing 3.4 × 10⁶ t of copper(33) to the smelter, yielding an emission factor of 21 g of Te atmospherically deposited near Flin Flon per t of Cu processed (72.2 t Te/3.4 × 10⁶ t Cu = 21 g Te/t Cu). As net 1900–2010 global Cu production(34) (minus production from recycling) is 451 × 10⁶ t, we estimate that 9,500 t of Te has been deposited near Cu smelters globally. As net global refined Te production(2) (1940–2010) is estimated at 11,000 t, Te emissions to air from Cu smelters is both a large source of Te contamination and a very large loss in potential Te production. This assumes the Flin Flon smelter process and the trace element composition of the Volcanogenic-Massive Sulfide (VMS) deposits exploited at Flin Flon are comparable to other 20th century Cu producers. This appears reasonable considering current information, which while limited indicates porphyry Cu deposits (dominant global Cu and Te source) have an equivalent Te content to VMS Cu deposits.(35)
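The emission factor arithmetic in the passage just quoted is easy to reproduce. The sketch below uses only the numbers given in the quoted text; the variable names are my own. It appears that the authors' 9,500 t figure comes from rounding the emission factor to 21 g/t before scaling up; carrying the unrounded factor gives a slightly higher number.

```python
# Figures quoted from the paper in the passage above.
te_deposited_t = 72.2           # t of Te deposited within 50 km of Flin Flon
cu_processed_t = 3.4e6          # t of Cu contained in ore sent to the smelter
global_cu_t = 451e6             # t, net global Cu production, 1900-2010
global_te_refined_t = 11_000.0  # t, net global refined Te production, 1940-2010

GRAMS_PER_TONNE = 1e6

# Emission factor: grams of Te deposited per tonne of Cu processed.
emission_factor = te_deposited_t * GRAMS_PER_TONNE / cu_processed_t

# Scale to global Cu production, with the rounded and unrounded factor.
global_te_rounded_t = round(emission_factor) * global_cu_t / GRAMS_PER_TONNE
global_te_unrounded_t = emission_factor * global_cu_t / GRAMS_PER_TONNE

# Deposited Te as a fraction of all the Te ever refined.
fraction_of_refined = global_te_rounded_t / global_te_refined_t

print(f"Emission factor: {emission_factor:.1f} g Te per t Cu")
print(f"Global deposition (factor rounded to 21): {global_te_rounded_t:.0f} t")
print(f"Global deposition (unrounded factor): {global_te_unrounded_t:.0f} t")
print(f"Fraction of refined Te production: {fraction_of_refined:.2f}")
```

Either way, the tellurium estimated to have been deposited around copper smelters is of the same order as all the tellurium ever refined, which is the authors' point.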

Some measurement of the enrichment in the lake cores of various elements connected with mining:

The caption:

Average annual depositions into the Experimental Lakes Area, a remote region of Canada:

The caption:

Some conclusions from the paper:

The low apparent settling velocity for Te (similar to macronutrients; C, N, and P) despite its high particulate matter affinity(10,48) implies that some process(s) are acting within the aquatic environment slowing its apparent descent, possibly significant biological Te uptake and reprocessing. While Te is normally rare in the environment, it is highly toxic for most bacteria, with effects seen at concentrations 100× lower than required to produce toxic effects for more common elements of concern (Se, Cr, Hg, and Cu).(7) As Te utilization and potential human and environmental exposure has greatly increased in the past decade and is likely to increase further, it would be prudent to acquire a better understanding of Te interactions within the environment.

I'm not sure it would be "prudent." Couldn't we just declare solar cells "green" and forget about it?

Have a nice day tomorrow.

The High Molecular Diversity of Extraterrestrial Organic Matter in the Murchison Meteorite.

The paper I will discuss in this post goes back a few years, and concerns the reanalysis of the Murchison Meteorite, one of the most interesting and important meteorites ever analyzed - because of its implications for the origin of life - 40 years after it fell and was discovered in Australia. The paper is this one: High molecular diversity of extraterrestrial organic matter in Murchison meteorite revealed 40 years after its fall (Philippe Schmitt-Kopplin et al PNAS February 16, 2010. 107 (7) 2763-2768)

The paper is happily open access, at least apparently, at the link provided.

The Murchison Meteorite is of special interest because it contains a large number of amino acids, as do many other extraterrestrial objects and, of course, all living things, since amino acids are the constituents from which proteins are made. What is different about the amino acids in the Murchison Meteorite is that many - but not all - of the amino acids are chiral; that is, they exhibit the property of being isolated from their mirror images.

Some very basic organic chemistry for those who do not know it:

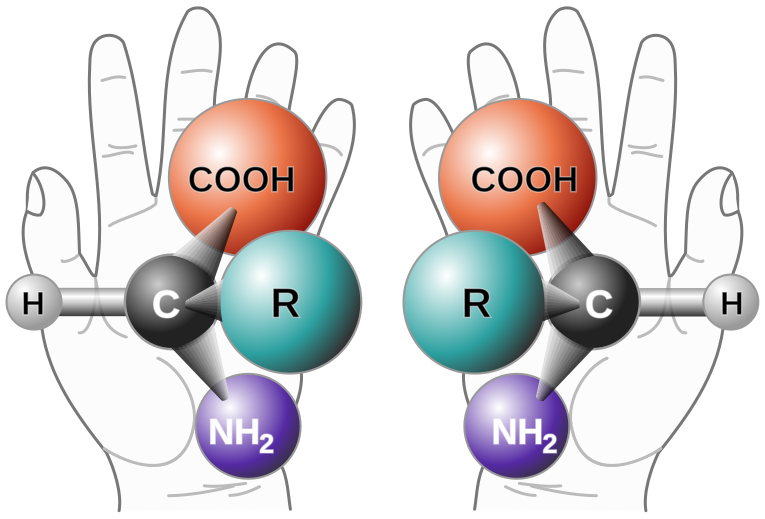

With one major exception, glycine, and a few minor exceptions, all of the amino acids in living things exhibit this property, which is sometimes called "handedness" since hands are mirror images of one another. This graphic from Wikipedia shows the property nicely:

In this picture, three dimensional examples - except if "R" is hydrogen - of the amino acids drawn cannot be superimposed upon one another; they are different molecules, called enantiomers, again, mirror images, featuring the same molecular connectivity but different arrangements in space.

One cannot in general make one enantiomer in the absence of the other in the lab unless one already has some reagent in the reaction medium which is also chiral and chirally pure. (Even with such reagents, the synthesis of a pure enantiomer can be problematic.) A mixture of two enantiomers in exactly a 50:50 ratio is otherwise obtained; we call such a mixture a "racemate." A pure single enantiomer, or a mixture that is nearly or partially purified with one enantiomer dominating the other, is said to be "optically active," since chiral compounds cause plane polarized light to rotate.

Thus chirality needs to have an origin; but one of the great scientific mysteries still remaining is "what is the origin of chirality?" If the origin of chirality is explained, it is presumably easier to explain the origin of life. For many years chirality was thought to have originated on Earth, but the Murchison meteorite, where some of the amino acids are present in chiral excess, suggested, surprisingly at the time, that chirality can originate, and is known, in outer space.

For some time, it was thought that the meteorite was contaminated by terrestrial amino acids when it landed, although there were over 80 different amino acids in the meteorite, whereas living things are generally, again with some exceptions, limited to 20 amino acids, and - another mystery - DNA codes for only 20, with only rare known exceptions, such as the amino acid selenocysteine, which is found in certain bacteria among other organisms.

That the amino acids in the meteorite were in fact extraterrestrial was suggested by the fact that certain "coded" amino acids were missing and, again, that there were so many unusual amino acids.

The matter was settled when it was discovered that the isotopic distribution of the constituent atoms in the meteorite, including those in the amino acids, was typical of extraterrestrial space and not Earth: Isotopic evidence for extraterrestrial non-racemic amino acids in the Murchison meteorite (Engel and Macko, Nature volume 389, pages 265–268 (18 September 1997))

The authors of the paper cited at the outset here, who reanalyzed parts of the meteorite 40 years after it fell, using more advanced instrumentation than was originally available to the first scientists to analyze it, remark that the focus on amino acids missed something, which was the analysis of things other than amino acids.

From their introduction:

...all previous molecular analyses were targeted toward selected classes of compounds with a particular emphasis on amino acids in the context of prebiotic chemistry as potential source of life on earth (10), or on compounds obtained in chemical degradation studies (11) releasing both genuine extractable molecules and reaction products (11–15) often difficult to discern unambiguously.

Alternative nontargeted investigations of complex organic systems are now feasible using advanced analytical methods based on ultrahigh-resolution molecular analysis (16). Electrospray ionization (ESI) Fourier transform ion cyclotron resonance/mass spectrometry (FTICR/MS) in particular, allows the analysis of highly complex mixtures of organic compounds by direct infusion without prior separation, and therefore provides a snapshot of the thousands of molecules that can ionize under selected experimental conditions (17).

Here we show that ultrahigh-resolution FTICR/MS mass spectra complemented with nuclear magnetic resonance spectroscopy (NMR) and ultraperformance liquid chromatography coupled to quadrupole time-of-flight mass spectrometry (UPLC-QTOF/MS) analyses of various polar and apolar solvent extracts of Murchison fragments demonstrate a molecular complexity and diversity, with indications on a chronological succession in the modality by which heteroatoms contributed to the assembly of complex molecules. These results suggest that the extraterrestrial chemical diversity is high compared to terrestrial biological and biogeochemical spaces.

Some pictures from the paper:

The caption:

Progressive detailed visualization of the methanolic Murchison extract in the ESI(?

The authors extracted different classes of the compounds by the use of differing solvents:

The caption:

Extraction efficiency of the solvents. (A) Number of total elemental compositions found in ESI(?

The caption:

Distribution of mass peaks within the CHO, CHOS, CHNO, and CHNOS series for molecules with 19 carbon atoms. CHO and CHOS series exhibit increasing intensities of mass peaks for aliphatic (hydrogen-rich) compounds, whereas CHNO and CHNOS series exhibit a slightly skewed near Gaussian distribution of mass peaks with large occurrences of mass peaks at average H/C ratio. The apparent odd/even pattern in the CHNO and CHNOS series denotes occurrence of even (N2) and odd (N1,3) counts of nitrogen atoms in CHNO(S) molecules in accordance with the nitrogen rule (Fig. S8E).

Since this extremely high resolution FTICR/MS (Fourier Transform Ion Cyclotron Resonance Mass Spectrometer) was utilized in a flow injection fashion, it is not possible to discern the precise nature of these molecules; the data only indicate that there is a wide variety of them. (Some Triple TOF analysis was performed using LC, but there isn't that much detail in the paper.)

I won't quote any more from the paper; the interested reader can read it online.

A scientist reader who is interested in the resolution is advised to look in the supplemental info associated with the paper. It also contains some Van Krevelen diagrams, which were originally developed to describe the sources of dangerous fossil fuels, woody matter being responsible for dangerous coal and dangerous natural gas; algae giving rise to dangerous petroleum.

There's a lot of absurd stuff about space aliens that flies around among the increasingly loud fringes. No, space aliens did not build the pyramids and Stonehenge. But it is possible that life is a natural outgrowth of the basic chemistry of carbon, and it is possible that many of the molecules of life, and maybe even life itself, did not originate on Earth.

It may be a matter of quasi-faith, but since we are so hell bent on destroying this planet, it's comforting to think that life might well, and probably does, exist elsewhere in the universe.

Have a nice hump day.

Bringing back the Polio virus to cure brain cancer.

The paper to which this post refers is this one: Recurrent Glioblastoma Treated with Recombinant Poliovirus (Darell D. Bigner, M.D., Ph.D et al New England Journal of Medicine DOI: 10.1056/NEJMoa1716435 June 26, 2018)

My mother died from a brain tumor, and although decades have passed, it never really goes away.

There was no treatment. I'm not sure there'd be one now.

As it happens, my mother-in-law is one of the last Americans to have contracted Polio. She's still alive, and suffers from what is happily becoming increasingly unknown, Post Polio syndrome, a syndrome involving intense pain.

The anti-science crowd of course, opposes vaccination, and from what I understand, some of the ignorance spread by a scientifically illiterate freak who is famous for taking off her clothes for a stupid magazine for puerile pubescent males and males who never grew up, and not famous for having ever made an intelligent remark in her useless life, has oozed out into the rare parts of the world where Polio is still known, preventing the elimination of this disease.

One plane trip by an infected person can bring it all back. Congratulations, naked asshole.

Another anti-science crowd opposes genetic engineering and this paper reports on genetic engineering.

The polio virus attacks nerve cells. Brain cancer is rogue nerve cells, and as many people know, cancer cells - most often genetic mutants in their own right - do display many of the proteins that normal cells do.

The target protein for the polio virus is a protein known as CD155. Apparently in brain cancer cells this particular protein is greatly upregulated.

The virus has been re-engineered to attach to this protein, and thus to stimulate an immune response to cells displaying an excess of CD155.

This graphic, written in the somewhat depressing format of mean survival years, says something.

Some text from the paper:

Overall Survival among Patients Who Received PVSRIPO and Historical Controls.

However, overall survival among the patients who received PVSRIPO reached a plateau beginning at 24 months, with the overall survival rate being 21% (95% CI, 11 to 33) at 24 months and 36 months, whereas overall survival in the historical control group continued to decline, with overall survival rates of 14% (95% CI, 8 to 21) at 24 months and 4% (95% CI, 1 to 9) at 36 months (Figure 1). A sensitivity analysis evaluating the effect of including patients in the historical control group who only underwent biopsy revealed that their inclusion had no effect on survival estimates (Table 1, and Fig. S3 in the Supplementary Appendix). In comparison, the use of NovoTTF-100A in patients with recurrent glioblastoma led to an overall survival rate of 8% at 24 months and of 3% at 36 months. It is too early to evaluate our statistical hypothesis of survival at 24 months, because only 20 of the 31 patients at dose level −1 were treated with PVSRIPO more than 24 months before the data-cutoff date of March 20, 2018.

Because patients who have tumors with the IDH1 R132 mutation are thought to have a survival advantage, we examined whether long-term survivors who have tumors with the IDH1 R132 mutation disproportionately contributed to the overall survival in the entire group. Survival analyses involving only the patients who received PVSRIPO whose tumors were confirmed to have nonmutant IDH1 R132 (Table 1) revealed a median overall survival of 12.5 months among the 45 patients with nonmutant IDH1 R132 and 12.5 months among all 61 patients who received PVSRIPO. Moreover, the overall survival rate at 24 months and 36 months was 21% among the 45 patients with nonmutant IDH1 R132 and among all 61 patients who received PVSRIPO. These findings are consistent with reports that IDH1 R132 status has no bearing on survival among patients with recurrent glioblastoma.20

...

In this clinical trial, we identified a safe dose of PVSRIPO when it was delivered directly into intracranial tumors. Of the 35 patients with recurrent WHO grade IV malignant glioma who were treated more than 24 months before March 20, 2018, a total of 8 patients remained alive as of that cutoff date. Two patients were alive more than 69 months after the PVSRIPO infusion. Further investigations are warranted.

This is not a comprehensive "one size fits all" cure, but it's something.

It's cool that a virus that caused so much pain in my family can be reengineered to address a disease that also caused me and my family so much pain.

I thought it interesting, and decided to point out this promising advance in medical science.

Harnessing Clean Water from Power Plant Emissions

The scientific paper I will discuss in this post is this one, from which the title of the post itself is taken:

Harnessing Clean Water from Power Plant Emissions Using Membrane Condenser Technology (Park, et al ACS Sustainable Chem. Eng., 2018, 6 (5), pp 6425–6433)

Here is the introductory graphic provided with the paper:

The caption:

One of the most exigent issues connected with climate change, and with other aspects of our generation's contempt for all future generations, is water. One aspect of this problem derives from lack of access to clean and safe fresh water, owing to chemical and elemental pollution of drinking and agricultural water. The other has to do with seawater, which, owing to rising seas, is causing intrusion of salts into previously available groundwater, not to mention killing people in extreme weather events and tectonic events, examples being the 2004 Indonesian quake, which killed about a quarter of a million people, and the 2011 Sendai/Fukushima quake, where 20,000 people died from seawater, not that anyone gives a rat's ass about people killed by seawater.

There are really not many viable solutions being actively pursued to prevent the rise of the seas; in fact there are none, but being - at the expense of producing an oxymoron - a "cynical optimist" I often consider some, the most challenging being the geoengineering task of removing the dangerous fossil fuel waste carbon dioxide that our generation has criminally dumped into our favorite waste dump, the planetary atmosphere.

Another option also crosses my mind from time to time, and that is removing water from the seas and storing and/or using it on dry land, including land parched by climate change. This obviously involves desalination. I've lived through a number of profound droughts in the regions in which I've lived, both in California where the effort to "do my part" involved flushing my toilet with shower water collected in buckets, and here in New Jersey, where it involved watching trees die. Always in a drought in a region near the sea, you'll run across people who will say "Why don't 'they' just desalinate seawater."

The answer to that question should be obvious, but somehow isn't to most people who blithely refer to "they" rather than "we:" It takes energy, lots of energy to desalinate water.

The proportion of energy obtained from dangerous fossil fuels on this planet is rising, not falling. In the "percent talk" often utilized by defenders of the so called "renewable energy" industry, the proportion of primary energy obtained by the combustion of dangerous fossil fuels in the 21st century has risen from 80% in the year 2000 to 81% in the year 2016.

In "percent talk" a 1% increase in the use of dangerous fossil fuels seems rather modest, but in honest representations, it's rather dire. In the year 2000 world energy consumption was 420.15 exajoules; in 2016 it was 576.10 exajoules. This "one percent" increase therefore represents an overall increase of 129.71 exajoules in the use of dangerous fossil fuels, which - to put it in perspective - is more energy than is utilized by the entire United States for all purposes; according to EIA data, the United States consumed 103.09 exajoules of primary energy in 2017.

IEA 2017 World Energy Outlook, Table 2.2 page 79 (I have converted MTOE in the original table to the SI unit exajoules in this text.)

US Primary Energy Consumption Flow Chart
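For anyone who wants to check the difference between "percent talk" and absolute energy, here is a rough sketch using only the figures in the text. It uses the rounded 80% and 81% shares, so it lands near, but not exactly on, the 129.71 exajoule figure, which was presumably computed from the unrounded table entries. The helper `mtoe_to_ej` and the variable names are mine; the conversion factor is the standard definition 1 toe = 41.868 GJ.

```python
# Standard conversion: 1 toe = 41.868 GJ, so 1 Mtoe = 0.041868 EJ.
EJ_PER_MTOE = 0.041868


def mtoe_to_ej(mtoe):
    """Convert million tonnes of oil equivalent to exajoules."""
    return mtoe * EJ_PER_MTOE


# Figures quoted in the text above (already in exajoules).
world_2000_ej = 420.15
world_2016_ej = 576.10
fossil_share_2000 = 0.80   # rounded share from the text
fossil_share_2016 = 0.81   # rounded share from the text
us_2017_ej = 103.09

# Absolute fossil energy in each year, and the change between them.
fossil_2000_ej = world_2000_ej * fossil_share_2000
fossil_2016_ej = world_2016_ej * fossil_share_2016
fossil_increase_ej = fossil_2016_ej - fossil_2000_ej

print(f"Fossil energy, 2000: {fossil_2000_ej:.2f} EJ")
print(f"Fossil energy, 2016: {fossil_2016_ej:.2f} EJ")
print(f"Increase: {fossil_increase_ej:.2f} EJ")
print(f"Increase / US 2017 consumption: {fossil_increase_ej / us_2017_ej:.2f}")
```

With the rounded shares the increase comes out at about 130 exajoules, roughly a quarter more than total US primary energy consumption in 2017.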

(The United States appears to have achieved modest increases in energy efficiency, although on serious reflection, one wonders whether this increase in efficiency simply represents the export of energy intensive manufacturing operations to countries with less onerous environmental and labor regulations, which although it represents an ethical tragedy - not that many people care about ethics - certainly represents a profitable approach for those who think the end of all human activity should be money.)

I take and have taken a lot of flak here and elsewhere for my unshakable conviction that nuclear energy is the only environmentally sustainable form of energy available to humanity. My goal is not to be popular - I'm not - but rather to be informed and reasonable. The latter comes at the expense of the former.

Although in the United States and elsewhere, nuclear energy has been a very successful enterprise that has (worldwide) saved close to 2 million lives, it is, as currently practiced, nowhere near environmentally optimized, chiefly because the technology under which it operates was essentially developed in the 1950's and 1960's, a time in which - unlike today - engineers and scientists were highly respected on both ends of the political spectrum. (In my opinion, the further one is from the center of the political spectrum, the greater is one's contempt for scientists and engineers.) The chief environmental impact of the nuclear industry as currently practiced is thermal pollution.

The chief means of reducing the thermal impact of nuclear energy, in my opinion, would be to exploit modern advances in materials science to raise the temperature of reactors by an order of magnitude, as counterintuitive as this might seem to people with no knowledge of the laws of thermodynamics, an effort that is being explored in the academic nuclear wilderness even if the general public is getting more absurd in its thinking about energy and more contemptuous of scientists and engineers.

But even existing nuclear facilities and nuclear technology might be improved with respect to the environmental impact, which will shortly bring me to the paper cited at the opening of this post.

It can be shown that the thermal efficiency of all nuclear reactors in the United States in 2017 was 32.875%. This is slightly less than the traditional value given for thermal plants in the US, 33%, but as temperatures climb - as they are obviously doing because of climate change - the thermal efficiency of all thermal power plants will fall, since efficiency is a function of the temperature of the environmental thermal reservoir, in this case river, lake or seawater, which is, of course, a function of the weather. (Combined cycle dangerous natural gas plants have considerably higher thermal efficiency than other thermal plants, and can approach 60%, although this thermal efficiency can be severely degraded if the plant is temporarily shut down because the wind is blowing for a few hours and the sun is shining. I envision combined cycle nuclear plants with even higher efficiency.)
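The dependence of efficiency on the temperature of the cold reservoir can be illustrated with the Carnot limit, 1 - Tc/Th, which is the theoretical ceiling for any heat engine and not the efficiency of a real plant. The sketch below is only an illustration: the hot side temperature and the cooling water temperatures are values I have assumed for the example, not data for any actual reactor.

```python
def carnot_efficiency(t_hot_k, t_cold_k):
    """Upper bound on heat engine efficiency between two reservoirs (kelvin)."""
    if t_cold_k <= 0 or t_hot_k <= t_cold_k:
        raise ValueError("require 0 < t_cold_k < t_hot_k")
    return 1.0 - t_cold_k / t_hot_k


def celsius_to_kelvin(t_c):
    return t_c + 273.15


# Assumed, illustrative hot side temperature (not taken from any plant data).
T_HOT_K = 560.0

# Assumed cooling water temperatures, in Celsius, for a cool and a warm day.
for t_cold_c in (10.0, 20.0, 30.0):
    eta = carnot_efficiency(T_HOT_K, celsius_to_kelvin(t_cold_c))
    print(f"Cooling water at {t_cold_c:.0f} C: Carnot limit {eta:.1%}")
```

Warmer cooling water lowers the ceiling, and raising the hot side temperature raises it, which is the thermodynamic argument for higher temperature reactors.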

The preliminaries out of the way, let me now reproduce the opening paragraph of the paper cited at the opening, detailing the environmental cost of thermal plants:

The evaporated water (i.e., white plumes) also poses several downsides such as visual pollution, frost damage, and corrosion of chimneys and stacks. The current practice now is to intentionally heat up the emission stack to avoid corrosion,4 which consumes additional energy. If the evaporated water can be effectively recovered, it can be a fruitful source of distilled water and latent energy, and it can relieve the exacerbating energy–water collisions, particularly during drought or hot weather. In addition, the technology can be valuable to other industries that employ water-cooling systems such as steel, semiconductors, and pulp industry.

Obviously much of the introduction here refers to the waste dumping devices used for dangerous fossil fuel plants, smokestacks, which are generally corroded by the fossil fuel waste, which ought to give one pause to reflect on what dangerous fossil fuel waste does to lungs as opposed to bricks. However, nuclear power plants - which, despite so much horseshit thrown around about so called "nuclear waste," are observed to successfully store their valuable byproducts on site for indefinitely long periods - do consume considerable amounts of water. Now, some of this water is recovered in the form of rain on land, but a considerable portion is not; it falls into the sea and is lost.

The paper reviews existing technologies for the recovery of water, and notes that many of them - heat exchangers for example - provide low quality water, while others, the use of glycols for example, incur an energy penalty that makes them self defeating. The focus of the paper is on the development of ceramic membranes to recover water.

The authors produce a graphic showing the options for designing these types of devices:

The caption:

The focus of their paper is optimizing the type of membrane described by figure (b) in the graphic, the transport membrane condenser which they refer to as "TMC" throughout the rest of the paper:

They note that it is important to consider the thermodynamics of this process, and comment on this aspect in an honest assessment of the energy penalty associated with water recovery, which cannot be eliminated but can be significantly reduced:

They designate their ceramic membrane KRICT100, and they compare it with a commercial ceramic membrane identified as HYFLUX20. They note that most commercial membranes already in use (most probably in smokestacks) are organic polymers, the long term stability of which is not expected to be high, meaning that they will incur an environmental and economic penalty when they require replacement: The longevity of devices affects not only the cost of their use, but also their environmental impact. (This is just one of the reasons that the wind industry sucks.)

Here are some microscopic views of the two materials:

The caption:

Here is the characterization of the two materials in terms of pore size distribution:

The caption:

The authors' product obviously demonstrates far better control over the distribution of pore sizes when compared with the commercial product, although it's not clear that this advantage can be maintained upon scale up.

They test the performance with a laboratory set up described by this schematic graphic.

The caption:

There may be a graphic error here, or else I'm going color blind: I can't see blue "cool" lines, but no matter. One can figure out where they are supposed to be. The science is good even if the proof reading isn't and the graphics aren't.

For thermodynamic reasons, the exterior temperature of the materials is apparently an important factor, and ceramic membranes perform better than the organic polymers commonly in use today:

A graphic on this subject:

The caption:

Some commentary from the text of the paper on this factor is probably appropriate:

Therefore, from the performance perspective, it certainly is more effective to utilize ceramic membranes for membrane condenser applications. However, ceramic membranes are brittle, rendering them difficult to handle in large scale. On the other hand, polymeric membranes exhibit relatively low thermal stability but can be more cost-effective.

These scientists are doing what responsible scientists should always do, point to the limitations associated with their work.

As it happens, in connection with other interests I have that have little connection with water recovery, I have been studying ceramic materials and considering some of the properties of composites that may address some of the concerns about large scale and brittleness here, although I am not competent enough in this area to assert that this is, in fact, the case.

The authors note that in any case, the properties of ceramic vs. polymeric membranes require opposing morphology:

… For ceramic membranes, lower porosity is preferred yet has negligible effect on the membrane temperature because of its high thermal conductivity. Instead, more focus can be placed on controlling the membrane pore size to improve the condensed water quality.

Here is figure 10:

The caption:

The focus of this paper has been largely on the dirtiest energy utilized by humanity, which is also, by far, the largest form it uses, dangerous fossil fuel based energy. The commonly held opinion that dangerous natural gas, alone among the three dangerous fossil fuels, is "almost" clean is a fantasy which represents violence against all future generations.

It is not enough to oppose Trump's violence against the children of immigrants - as all decent people do - while ignoring the state of the world in which they will ultimately live, with and without the activities of racist American Presidents like the President we have now. It is not enough. We must work to do better.

The work described here, in present and future manifestations, has real applicability for clean energy, clean energy being represented by one and only one form of energy, nuclear energy.

If the coolant is seawater, devices such as this represent effective desalination devices.

Now there are definite risks associated with desalination and I'm definitely not representing it as a panacea of any sort, nor representing that it can ultimately sustain humanity in the face of clear reductions in the carrying capacity of the entire planet. Some of these risks include disruptions to the thermohaline circulation patterns, which may trigger disastrously fast climatic fluctuations of a kind known to have occurred in the past, for example, Dansgaard-Oeschger cycles.

Still, the risk is worth weighing against other risks, both to humanity and the planet.

I have argued here and elsewhere that uranium is essentially inexhaustible because of the presence of nearly 5 billion tons of this element in the earth's oceans, an amount that can never be reduced because of the geochemical circulation of the element for so long as an oxygen atmosphere persists. (Humanity will, of course, be irrelevant should oxygen cease to be present in the atmosphere, if, for example, we completely destroy the oceans, a possibility that seems not to be out of the question.) Uranium flows can also be captured in rivers, particularly should we ever restore rivers to healthy conditions should humanity abandon its awful fixation on so called "renewable energy," or by removing uranium as a constituent of "NORM" (Naturally Occurring Radioactive Materials) from drinking water. (I pointed to a case in which this issue presents itself recently in this space: Large-Scale Uranium Contamination of Groundwater Resources in India.)
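The "nearly 5 billion tons" figure can be checked with a back-of-the-envelope sketch. It assumes the commonly quoted seawater concentration of 3.4 micrograms of uranium per liter and an ocean volume of roughly 1.335 billion cubic kilometers; the volume is an assumption introduced here for illustration, not a number taken from any paper discussed in this post.

```python
# Rough estimate of the uranium dissolved in the oceans.
# Both inputs are illustrative assumptions, not values from a cited paper.
URANIUM_UG_PER_L = 3.4        # micrograms of uranium per liter of seawater
OCEAN_VOLUME_KM3 = 1.335e9    # approximate volume of the world ocean, km^3

LITERS_PER_KM3 = 1e12         # 1 km^3 = 1e9 m^3 = 1e12 L
GRAMS_PER_TONNE = 1e6


def ocean_uranium_tonnes(conc_ug_per_l=URANIUM_UG_PER_L,
                         volume_km3=OCEAN_VOLUME_KM3):
    """Return the mass of dissolved uranium in metric tons."""
    liters = volume_km3 * LITERS_PER_KM3
    grams = conc_ug_per_l * 1e-6 * liters
    return grams / GRAMS_PER_TONNE


if __name__ == "__main__":
    print(f"{ocean_uranium_tonnes() / 1e9:.1f} billion metric tons")
```

Under these assumptions the result is about 4.5 billion metric tons, consistent with the round figure used above.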

In connection with this, I have been working to wrap my head around the international scientific consensus on the thermodynamic equation of state for seawater, TEOS-10, from which one can calculate that the extremely high energy density of uranium (transmuted into plutonium) makes the infinite sustainability of uranium supplies from ocean (and fresh) water feasible, even if all the energy inputs required to effect it come from fission itself.

But consideration of the equation of state of seawater, and the environmental risks and benefits of desalination will have to wait for another time.

I hope you're having a pleasant weekend.

Science Paper: Zero Emission Hopes Are Ignoring Some Intractable Issues.

The paper from the primary scientific literature to which this post refers is this one: Net-zero emissions energy systems (Davis et al Science 29 Jun 2018: Vol. 360, Issue 6396, eaas9793)

The paper is a review article, and the large body of authors come from a wide array of academic and government institutions.

Some years back, in a blog post elsewhere, I quoted this text from a paper in Nature Geoscience:

Source: Olivier Vidal, Bruno Goffé and Nicholas Arndt, Nature Geoscience 6, 894–896 (2013). The source references for the calculations are found in the supplementary information for this paper.

In terms of energy, the WWF prediction for wind energy, 25,000 TWh, amounts to about 90 exajoules of energy. As of 2016, the world was consuming 587 exajoules, so this cannot be called a "zero emissions" program. WWF, by the way, stands for "World Wildlife Fund." One would hope, naively I'm sure, that the current membership of the "World Wildlife Fund" is not hoping for this outcome, since the rendering of all our wild spaces into industrial parks for the wind industry will surely render many species of bat completely extinct, and many species of birds as well. But the reality is that they are hoping for this horror, since most putative "environmental" organizations these days are largely funded by bourgeois people who can't, or refuse to, think.
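The unit conversion behind the 90 exajoule figure is easy to reproduce. The sketch below uses only the two numbers quoted above (25,000 TWh and 587 exajoules) and the definition 1 TWh = 3.6 × 10^15 J; the function name is just an illustrative choice.

```python
J_PER_TWH = 3.6e15   # 1 TWh = 1e12 Wh * 3600 J/Wh
J_PER_EJ = 1e18      # 1 exajoule


def twh_to_ej(twh):
    """Convert terawatt-hours to exajoules."""
    return twh * J_PER_TWH / J_PER_EJ


if __name__ == "__main__":
    wind_ej = twh_to_ej(25_000)       # WWF wind figure quoted in the text
    share = wind_ej / 587             # world consumption in 2016, EJ
    print(f"{wind_ej:.0f} EJ, or {share:.1%} of 587 EJ")
```

On these numbers the predicted wind output comes to roughly 15% of 2016 world energy consumption.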

The good news is that the wind industry will never produce 90 exajoules of energy in a year, but the bad news is that a lot of time, money, and even more regrettably, wild spaces and wildlife will be destroyed trying to make what has not worked, is not working and will not work, work.

In my blog post, building on this paper, I wrote:

The situation with respect to aluminum is more problematic. According to the World Aluminum Institute, in 2014, the world produced 53,034,000 MT of aluminum.[20] Thus over the next 35 years, about the total of 7 years of production of this metal, at current levels, would be needed to construct the wind plants that the WWF happily predicts.

I noted, at the time of that writing, that the entire wind industry on the entire planet, after half a century of wild eyed cheering for it, was only capable of producing 67% of the electricity required to produce aluminum in a typical year.

The authors of the paper cited at the very beginning of this post note that while (some forms) of electricity can conceivably be decarbonized, other forms are exceedingly difficult to imagine addressing.

They post a photograph of a steel operation, and let's be clear about something, OK? Steel making is coal dependent, irrespective of all the delusional nonsense one hears in which it is claimed that coal is dead. It's not even close. In the 21st century, coal has been the fastest growing form of energy production on the planet as a whole, growing roughly 9 times as fast (annual production rose by 60 exajoules since the year 2000) as the hyped, expensive, and useless solar and wind industries (which grew by a little less than 7 exajoules since the year 2000).

IEA 2017 World Energy Outlook, Table 2.2 page 79 (I have converted MTOE in the original table to the SI unit exajoules in this text.)

The photograph:

The caption:

Industrial processes such as steelmaking will be particularly challenging to decarbonize. Meeting future demand for such difficult-to-decarbonize energy services and industrial products without adding CO2 to the atmosphere may depend on technological cost reductions via research and innovation, as well as coordinated deployment and integration of operations across currently discrete energy industries.

The caption is, by the way, pure optimism.

The introductory text from the paper:

Some commentary on this paragraph: There is nothing "straightforward" about generating electricity using "solar and wind." If there were, they would be significant forms of energy on this planet given the decades of mindless enthusiasm they've generated, never mind the trillions of dollars squandered on them. Moreover, to the extent that the effort is made to make them significant, again, to beat a horse or maybe to behead a hydra, the effort will represent an environmental disaster.

Please note that some of the authors come from NREL though, and thus this de rigueur claim is unsurprising.

Nor is it true that nuclear energy is nonrenewable, at least to the extent that anything is "renewable," to use the magic if abused word. It can be shown, literally with thousands of citations and appeal to a few facts that follow from them, that it is physically impossible for humanity to consume all of the uranium on earth. Thus the fuel can no more be depleted than sunlight; it is the energy conversion device that matters in terms of cost and environmental sustainability.

According to this paper, there are six major categories of carbon emissions that are difficult to eliminate, totaling (based on their appeal to 2014 data) 9.2 billion metric tons out of 33.2 billion metric tons attributed to dangerous fossil fuels as of that year.
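For scale, the share those hard-to-eliminate categories represent follows directly from the two figures just quoted. A minimal sketch, using only the 9.2 and 33.2 billion metric ton values from the text:

```python
def share_of_total(part, total):
    """Return part/total as a fraction, guarding against a zero total."""
    if total <= 0:
        raise ValueError("total must be positive")
    return part / total


if __name__ == "__main__":
    hard_gt = 9.2      # difficult-to-eliminate CO2, billion metric tons (2014)
    total_gt = 33.2    # all fossil fuel CO2, billion metric tons (2014)
    print(f"{share_of_total(hard_gt, total_gt):.1%} of fossil CO2 emissions")
```

That works out to a bit under 28% of the 2014 total.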

(These figures are undoubtedly higher in 2018 since we have been completely ineffective at reducing either worldwide energy consumption or the portion of it coming from dangerous fossil fuels, both of which are actually increasing, not decreasing.)

They have a nice graphic explaining this:

The caption:

Difficult-to-eliminate emissions in current context.

(A and B) Estimates of CO2 emissions related to different energy services, highlighting [for example, by longer pie pieces in (A)] those services that will be the most difficult to decarbonize, and the magnitude of 2014 emissions from those difficult-to-eliminate emissions. The shares and emissions shown here reflect a global energy system that still relies primarily on fossil fuels and that serves many developing regions. Both (A) the shares and (B) the level of emissions related to these difficult-to-decarbonize services are likely to increase in the future. Totals and sectoral breakdowns shown are based primarily on data from the International Energy Agency and EDGAR 4.3 databases (8, 38). The highlighted iron and steel and cement emissions are those related to the dominant industrial processes only; fossil-energy inputs to those sectors that are more easily decarbonized are included with direct emissions from other industries in the “Other industry” category. Residential and commercial emissions are those produced directly by businesses and households, and “Electricity,” “Combined heat & electricity,” and “Heat” represent emissions from the energy sector. Further details are provided in the supplementary materials.

The Nature Geoscience paper linked above notes, by the way, that so called "renewable energy" requires 15 times as much concrete per joule (or megajoule or gigajoule or exajoule) as an equivalent amount of energy from a nuclear plant. Arguably it is fairly straightforward to recover used steel and aluminum, and for that matter copper, although the processing (or better put, reprocessing) will require a significant energy input, but it is going to be very difficult to recycle concrete sustainably. Any concrete squandered on offshore wind facilities will end up in less than 20 years (if the Danish data remains unchanged for the average lifetime of wind turbines) as little more than navigation hazards.

On concrete the authors write:

About 40% of the CO2 emissions during cement production are from fossil energy inputs, with the remaining CO2 emissions arising from the calcination of calcium carbonate (CaCO3) (typically limestone) (53). Eliminating the process emissions requires fundamental changes to the cementmaking process and cement materials and/or installation of carbon-capture technology (Fig. 1G) (54). CO2 concentrations are typically ~30% by volume in cement plant flue gas [compared with ~10 to 15% in power plant flue gas (54)], improving the viability of post-combustion carbon capture. Firing the kiln with oxygen and recycled CO2 is another option (55), but it may be challenging to manage the composition of gases in existing cement kilns that are not gas-tight, operate at very high temperatures (~1500°C), and rotate (56).

I have some criticisms of the statements here as well, but will spare the reader.

The authors spend a considerable amount of time discussing hydrogen and hydrogenation products as energy storage tools. All energy storage wastes energy; it is a physical requirement of the laws of the universe which are not subject to repeal.

They spend a fair amount of time discussing electrolysis, which is probably the best known, but also one of the worst means of generating hydrogen, although there are some very high temperature (supercritical water) forms of electrolysis that can achieve a mildly reasonable energy efficiency in terms of loss to waste. (For example at Neodymium Nickelate electrodes in solid state oxide fuel cells.)

They produce this graphic to discuss the costs of energy production using various technologies.

The caption:

(A) The energy density of energy sources for transportation, including hydrocarbons (purple), ammonia (orange), hydrogen (blue), and current lithium ion batteries (green). (B) Relationships between fixed capital versus variable operating costs of new generation resources in the United States, with shaded ranges of regional and tax credit variation and contours of total levelized cost of electricity, assuming average capacity factors and equipment lifetimes. NG cc, natural gas combined cycle. (113). (C) The relationship of capital cost (electrolyzer cost) and electricity price on the cost of produced hydrogen (the simplest possible electricity-to-fuel conversion) assuming a 25-year lifetime, 80% capacity factor, 65% operating efficiency, 2-year construction time, and straight-line depreciation over 10 years with $0 salvage value (29). For comparison, hydrogen is currently produced by steam methane reformation at costs of ~$1.50/kg H2 (~$10/GJ; red line). (D) Comparison of the levelized costs of discharged electricity as a function of cycles per year, assuming constant power capacity, 20-year service life, and full discharge over 8 hours for daily cycling or 121 days for yearly cycling. Dashed lines for hydrogen and lithium-ion reflect aspirational targets. Further details are provided in the supplementary materials.
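Two of the numbers in panel (C) of the caption can be sanity checked with a short sketch. It assumes the heating value of hydrogen is taken on a higher heating value basis (about 141.8 MJ/kg); the caption does not say which basis the authors used, so that choice, the function names, and the example electricity price are all assumptions here. The sketch covers the electricity input only and ignores electrolyzer capital cost, so it is a floor on the cost of electrolytic hydrogen, not the authors' full model.

```python
HHV_H2_MJ_PER_KG = 141.8   # higher heating value of hydrogen (assumed basis)
MJ_PER_KWH = 3.6


def usd_per_kg_to_usd_per_gj(usd_per_kg, heating_value_mj_per_kg=HHV_H2_MJ_PER_KG):
    """Convert a hydrogen price per kilogram to a price per gigajoule."""
    gj_per_kg = heating_value_mj_per_kg / 1000.0
    return usd_per_kg / gj_per_kg


def electricity_cost_per_kg_h2(price_usd_per_kwh, efficiency=0.65,
                               heating_value_mj_per_kg=HHV_H2_MJ_PER_KG):
    """Electricity cost alone of making 1 kg of hydrogen by electrolysis."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    kwh_per_kg = heating_value_mj_per_kg / MJ_PER_KWH / efficiency
    return price_usd_per_kwh * kwh_per_kg


if __name__ == "__main__":
    print(f"$1.50/kg is about ${usd_per_kg_to_usd_per_gj(1.50):.1f}/GJ")
    # 0.05 USD/kWh is a hypothetical electricity price, chosen for illustration.
    print(f"Electricity alone: ${electricity_cost_per_kg_h2(0.05):.2f}/kg")
```

On this basis $1.50/kg corresponds to a little over $10/GJ, in line with the caption, and at the 65% efficiency quoted there the electricity alone costs about twice the steam reforming benchmark at the hypothetical price of 5 cents per kWh.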

Some commentary is necessary here:

The costs reported in graphic B here are definitely misleading, although they are so in the way that almost all such representations are misleading. For one thing, they exclude external costs: the costs to human health, animal health, and environmental health. Secondly, they isolate two forms of so called "renewable energy," solar and wind, from the costs associated with making power available when they themselves are unavailable. This is the cost of natural gas, since the solar and wind industries are completely dependent on access to dangerous natural gas to operate. As I often note, if it requires two separate systems to do what one system can do alone, the costs of each accrue to the other, and this is true of both external and internal costs. Thirdly, the variable cost given for nuclear assumes current technology, which involves (questionably) mining and enriching uranium, i.e. non-breeding situations. This is not the way to make nuclear energy sustainable. We have already mined enough uranium and enough thorium (the latter being dumped as "waste" by the wind industry and thus not mined for its larger and cleaner energy value).

I have a remark on graphic D, concerning grid scale storage. One of the storage mechanisms here is compressed air. The way that air compression loses energy is that compressing air causes it to heat. If this heat is lost - and it almost always is - when the air expands it will cool and the pressure will drop, reducing the effectiveness of the turbine.
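To give a feel for how much heating is involved, here is a minimal sketch using the ideal-gas relation for reversible adiabatic compression, T2 = T1 (P2/P1)^((γ-1)/γ). The heat capacity ratio of 1.4 for air, the 20 °C inlet temperature, and the tenfold pressure ratio are illustrative assumptions, not values from the paper, and real compressors are neither ideal nor single stage.

```python
GAMMA_AIR = 1.4  # ratio of heat capacities for dry air near room temperature (assumed)


def adiabatic_outlet_temperature(t_in_kelvin, pressure_ratio, gamma=GAMMA_AIR):
    """Temperature after reversible adiabatic compression of an ideal gas."""
    if t_in_kelvin <= 0 or pressure_ratio <= 0:
        raise ValueError("temperature and pressure ratio must be positive")
    return t_in_kelvin * pressure_ratio ** ((gamma - 1.0) / gamma)


if __name__ == "__main__":
    t_in = 293.15                                   # 20 degrees C, in kelvin
    t_out = adiabatic_outlet_temperature(t_in, 10.0)
    print(f"{t_in:.0f} K -> {t_out:.0f} K at a pressure ratio of 10")
```

Under these assumptions the air leaves the compressor at roughly 566 K. If that heat escapes before the air is expanded again, the work that produced it is not recovered.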

There is an energy device that uses compressed air: The jet engine. In a jet engine the air is reheated using a dangerous fossil fuel. There are papers that propose to store wind energy as compressed air and use dangerous natural gas to reheat it.

Personally I believe if we must store energy - and I'm not sure we must - the most reliable and sustainable way to do so would be compressed air. Arguably, although I will not discuss this here, such processing of air could be coupled with cleaning the air, since the types and volumes of very dangerous air pollutants are increasingly present in our atmosphere, including but not limited to carbon dioxide.

There are options for avoiding the need for dangerous fossil fuels for compressed air storage. This would involve the use of waste heat, plenty of which is available. There are other options as well using materials often (incorrectly) defined as waste.

But that's for another time.

Have a happy 4th.

A few minutes after the Germans...John Abercrombie.

Hot Cracks and Addressing Questions in the Origin of Life.

I spent part of my day yesterday reading about cracks in two ways, first in thinking about fracture toughness in silicon nitride, a cool material, which is not the subject of this post, and then about the effect of heat flowing through a crack to cause the polymerization of nucleotides into nucleic acids, which is the subject of this post.

I described my renewed interest in this topic here: Open source paper on "Defining Life."

Over the weekend, I found myself thinking about the anti-entropy that life is, and went poking around in the library.

Here's a cool paper I found on exactly that subject, the difficult case of the origin of nucleic acids, since many people postulate that life arose from an "RNA world:" Heat flux across an open pore enables the continuous replication and selection of oligonucleotides towards increasing length (Moritz Kreysing, Lorenz Keil, Simon Lanzmich and Dieter Braun, Nature Chemistry volume 7, pages 203–208 (2015))

Entropy is discussed in the introduction:

It has been known since Spiegelman’s experiments in the late 1960s11 that, even if humans assist with the assembly of an extracellular evolution system, genetic information from long nucleic acids is quickly lost. This is because shorter nucleic acids are replicated with faster kinetics and outcompete longer sequences. If mutations in the replication process can change the sequence length, the result is an evolutionary race towards ever shorter sequences.

In the experiments described here we present a counterexample. We demonstrate that heat dissipation across an open rock pore, a common setting on the early Earth12 (Fig. 1b), provides a promising non-equilibrium habitat for the autonomous feeding, replication and positive length selection of genetic polymers...

...Here we extend the concept to the geologically realistic case of an open pore with a slow flow passing through it. We find continuous, localized replication of DNA together with an inherent nonlinear selection for long strands. With an added mutation process, the shown system bodes well for an autonomous Darwinian evolution based on chemical replicators with a built-in selection for increasing the sequence length. The complex interplay of thermal and fluid dynamic effects, which leads to a length-selective replication (Fig. 1c, (1)–(4)), is introduced in a stepwise manner.

The caption:

The authors take dilute short DNA fragments and drive them through a crack which has a temperature gradient on either end, the direction of flow being from hot to cold.

Here's a schematic picture from the paper:

A convective flow cycles the growing nucleic acid chain, and the overall flow determines the size of the DNA that exits from the system, and the heat provides the energy required for sequence replication:

The famous PCR technique, albeit a process using a thermally stable enzyme as a catalyst, also relies on heat for replication - the authors do some PCR work in their experiments.

However in this case, the enzyme is omitted, and the reaction takes place via cycling through thermal gradients.

Another picture:

The caption:

Another figure shows the effect of flow rate on strand length:

Finally "size selection habitats" are shown:

The caption:

The authors write:

...and in their conclusion state:

In another paper, not cited here, that I encountered this weekend, John D. Sutherland, who has done very exciting work demonstrating a potential path for sugar containing phosphorylated sugars to arise out of simple molecules, used a Churchillian phrase to discuss where we are with explaining the generation of life from very simple prebiotic molecules, saying that the science of the prebiotic generation of life has reached "the end of the beginning."

Fascinating stuff, I think.

I hope you're having a pleasant week thus far.

Open source paper on "Defining Life."

As I age and more and more come face to face with the inevitability of dying, I wonder more and more, having experienced the real beauty of being alive, whence life came to be.

I have always wanted to read Schroedinger's famous book, "What is Life?" but probably will never find the time.

Nevertheless, I resolved to spend some time reading this review article, Prebiotic Systems Chemistry: New Perspectives for the Origins of Life (Kepa Ruiz-Mirazo, Carlos Briones, and Andrés de la Escosura, Chem. Rev., 2014, 114 (1), pp 285–366), when, not far in, I came across the following text:

However, we do not aim to discuss here in detail the issue of defining life: the reader is referred to a special issue of the journal Origins of Life and Evolution of Biospheres,9 or to a comprehensive anthology of articles on this subject.10

When I see references I'd like to pick up, I always email them to myself for my library time, and I usually send the reference as a link to the paper when possible so I can use my library time efficiently.

But when I generated the link to reference 9, I found that it opened at home, and thus is open sourced.

It's 244 pages on the question of "What is life," updated from Schroedinger, 85 years ago.

If you're interested, here it is:

Defining Life: Conference Proceedings.

Perhaps I don't know what life is, other than that, whatever it is, it is extraordinarily beautiful, even with the pain, and very much worth living.

Have a great weekend!

My kid is in France learning how to become a Brazilian, calling soccer "football" and complaining...

...about the "dirty tactics" the Swiss used against Brazil in the World Cup.

He shares his office in the lab with four Brazilians who, he says, speak a language that sort of is to Spanish what German is to English.

The Brazilians, he says, are "just like Americans."

After work, his new friends invite him to play "football."

Some day, he says, he'd like to spend time learning Portuguese.

Should I be concerned?

Scaling Graphene.

There's a lot being written about graphene these days. Graphene, for those who don't know, is a carbon allotrope that has the carbons bonded in an almost infinite series of fused hexagonal aromatic rings that make it planar. The neat thing about this allotrope is that it is exactly one atom thick. If it's thicker than one atom, it's graphite, most commonly experienced by most people as pencil lead.

There are thousands of pictures on the internet. Here's an electron micrograph, out of the Los Alamos National Laboratory, of the stuff with resolution on an atomic scale:

Source Page of the Image.

Graphene is proposed to have many uses and if I actually read all the papers I've seen in which it appears in the title, I'd be able to discuss some of them intelligently, but frankly, I skip over a lot of these papers, quite possibly all of them in fact because I'm too interested in other stuff. Mostly I've just mused to myself about the stuff, particularly its oxide, which I imagined might be functionalized as an interesting carbon capture material, but well, there's lots and lots and lots of those. The problem is not discovering new carbon capture materials; the problem is utilizing them without creating carbon dioxide waste dumps that don't exist and, were they to exist, would be unacceptably dangerous to future generations, not that we care about future generations.

When my son was touring Materials Science Departments at various universities in both "informational" sessions and in "accepted students" forums the word graphene came up a lot. At one such session, we were introduced to a professor who was described as having developed a way to prepare "kg quantities" of graphene, and I meekly raised my hand and asked, "What does a 'kilogram of graphene' actually mean?" If I were as rude as I sometimes am around here, I might have asked the question as "Isn't a kilogram of stacked graphene just graphite?"

But I wasn't. I didn't want to screw things up for my kid if he decided to go there. (He didn't.)

At another university, during an informational session for students who might apply, a graduate student, who was writing his thesis at the time, took an interest in my son and decided to give us a full tour of the department. Somehow I used (or muttered) the word "graphene" during the tour and he, a somewhat jaded guy with a decidedly sarcastic edge - my kind of guy - said, "Well, I'm sure it would be useful if they knew how to make it in useful quantities, but they don't."

My son did apply there, by the way, was accepted there, and is, in fact, going there, a wonderful university.

To my surprise I suddenly find myself interested in graphene though, because of a recent lecture on a subject about which I know nothing but about which I am interested in finding out more, as I discussed last night in a post in this space: Topological Semimetals.

The paper I linked in that post has the following remark:

The prototype of a DSM is graphene. The “perfect” DSM has the same electronic structure of graphene; i.e., it should consist of two sets of linearly dispersed bands that cross each other at a single point. Ideally, no other states should interfere at the Fermi level.

Graphene is a "perfect DSM," a "perfect Dirac Semimetal."

And today in my library hour, what should happen but that I was to come across a paper that reports an approach to scaling up graphene.

The paper is here: Exfoliation of Graphite into Graphene by a Rotor–Stator in Supercritical CO2: Experiment and Simulation (Zhao et al, Ind. Eng. Chem. Res., 2018, 57 (24), pp 8220–8229)

I have been and am very interested in supercritical CO2, by the way. "Supercritical" refers to a substance that is neither a liquid nor a gas but exists in a state that has properties of both and can only exist above certain temperatures and pressures called respectively the "critical temperature" and, of course, "the critical pressure." The critical temperature of carbon dioxide is only a little above room temperature, which makes it a readily accessible material.
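The definition above amounts to a simple two-condition test. The sketch below uses commonly tabulated critical constants for carbon dioxide, about 304.13 K (roughly 31 °C) and 7.377 MPa; those values and the function name are supplied here for illustration and are not taken from the paper discussed in this post.

```python
CO2_CRITICAL_T_K = 304.13     # critical temperature of CO2, kelvin (tabulated value)
CO2_CRITICAL_P_MPA = 7.377    # critical pressure of CO2, megapascals (tabulated value)


def is_supercritical_co2(temperature_k, pressure_mpa):
    """True when both temperature and pressure exceed CO2's critical point."""
    return (temperature_k > CO2_CRITICAL_T_K
            and pressure_mpa > CO2_CRITICAL_P_MPA)


if __name__ == "__main__":
    # Hypothetical operating points, chosen only to exercise the check.
    for t_k, p_mpa in [(313.15, 10.0), (298.15, 10.0), (313.15, 0.1)]:
        state = "supercritical" if is_supercritical_co2(t_k, p_mpa) else "not supercritical"
        print(f"{t_k:.2f} K, {p_mpa:.1f} MPa: {state}")
```

The check makes the point in the text concrete: a modest 40 °C is already above the critical temperature, so reaching the supercritical state is mostly a matter of pressure.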

As is my wont lately in this space when discussing scientific papers, I'll do some brief excerpts and invite you to look at the pictures, since whenever I decide whether or not to actually read a paper upon which I stumble (as opposed to a paper that I've sought for some reason), that's what I do, look at the pictures.

From the intro:

...The purpose of this work is to investigate the exfoliation mechanism of a rotor–stator mixer in supercritical CO2 by a combination of the experiment and CFD simulation and to make the optimal design of the rotor–stator mixer in terms of exfoliation efficiency for the potential industrial application.

CFD is computational fluid dynamics if you didn't know.

Now some pictures...

Here's a schematic of things they evaluate by computer simulation:

The caption:

Rotor design and (low, if higher than usual where graphene is concerned) yields:

The caption:

A little discussion of the mathematical physics of the situation:

2.2.1. Eulerian–Eulerian Two-Fluid Equations. Different phases were treated as interpenetration continuum. The conservation equations were solved simultaneously for each phase in the Eulerian framework. Then, the continuity equations for phase n (n = l for the liquid phase, s for the solid phase) can be expressed by

...

...

Some more cool math:

At the same time, a granular temperature was introduced into the model:

...

...

Some simulation results:

The caption:

More simulation showing vessels and rotors:

The caption:

Figure 6. Stator and the contours of velocity and volume fraction in multiwall stator at 3000 rpm. (A) The lateral view and the vertical view of the multiwall stator, (B) horizontal, and (C) perpendicular fluid flow pattern induced by multiwall stator; the graphite of volume fraction in (D) eight-tooth stator and (E) multiwall stator.

Then they set about making themselves some graphene. It, along with graphene made by other processes, is pictured here:

The caption:

NMP is N-methyl-2-pyrrolidone. I've used it, I'm still alive but know nothing of its toxicology. If it turns out to be toxic, we can use it to make solar cells, whereupon it will be declared "green," no matter what its effect on living things.

Some more electron micrographs:

The caption:

Nevertheless the yields are not spectacular enough to make industrial application straightforward, although if it turns out that graphene solar cells are "great" we can bet the planetary atmosphere on the expectation that they'll be available "by 2050" when I - happily for many people who don't find me amusing - will be dead.

The caption:

Some concluding remarks:

Love that percent talk!

Interesting, I think, although I think that graduate student had a point.

I hope your Friday will be pleasant and productive.

Profile Information

Gender: Male

Current location: New Jersey

Member since: 2002

Number of posts: 33,512